Like many environments, we run a few long-lived Hadoop clusters in our lab for doing testing of various feature and functionality scenarios before they are placed in a production context. These are used as big sandboxes for our team to play with and do development upon. Today, we encountered a strange Hive Metastore error on one environment that we had not previously run across: table creations would throw RPC errors from both Hive and Impala.

The Cluster: A Background

In this particular cluster, we utilize Cloudera Manager 5.10 to maintain a very straight forward CDH installation. It has Impala, Hive, YARN, Kudu, and a few other things to support the handful of engineers using it as a sandbox. Because this is a test environment, we don’t run it in High Availability mode, nor do we have security enabled (although, we do have other testing environments for those features).

Simple enough.

The Cluster: A history of names

At some point in the last six months, we underwent a change in how we manage clusters, necessitating a redesign of the DNS architecture to make it easier to allow different groups to self-service their DNS hostname creation requests. As part of this effort, we had to walk through all of our clusters changing hostnames to reference the new, correct subdomain for each cluster. We went from having a general subdomain where everything lived (i.e. hadoop.example.net) to having cluster subdomains named by cluster (i.e., cdhhive.example.net). This is usually a straight forward process and Cloudera provides good documentation to walk you through renaming hosts.

The Error

ImpalaRuntimeException: Error making 'createTable' RPC to Hive Metastore: CAUSED BY: MetaException: java.lang.IllegalArgumentException: java.net.UnknownHostException: node1.hadoop.example.net should be cdhhive, not hadoop.example.net

The error implied an obvious (to us) issue: a configuration file somewhere must have the wrong hostname still listed from our attempts earlier in the year to rename the cluster nodes. Unfortunately, looking through all the configuration options for Hive, Impala, and various other components controlled by Cloudera Manager led us to believe that none of the properties were mis-configured. So what was it?

Further down the debug hole

Isolating the configurations leads us down a few paths:

- The client configurations in use by the user may be wrong.

- The old DNS configuration is cached somewhere, such as in running daemons.

- The old DNS configuration is still referenced somewhere outside of Cloudera Manager and CDH, such as in the host’s /etc directory.

- The old DNS configuration is still referenced within the Impala statestore.

- The old DNS configuration is still referenced within the Hive Metastore.

Wrong client configuration?

The first thought was that the user had a locally cached set of client configurations referenced by beeline, hive, or impala-shell. This was easily dispelled: the user was working with impala-shell, which connects to a specific daemon. Additionally, we verified that all nodes on the cluster were showing the latest client configurations both in CM as well as on the nodes themselves.

Strike out, the first.

Wrongly cached DNS in running daemons?

We had done the host renames months back and all of us were sure that the cluster had been restarted multiple times since then. Just to rule it out, we shut down the cluster and started fresh to make sure none of the daemons had run from before the rename. This changed nothing.

Strike out, number two.

Wrongly referenced DNS names in /etc?

We thought that an OS-level file in /etc (such as /etc/hosts) may be confusing things on either the edge node we ran impala-shell from, the node running Hive Metastore, or the node running the Impala daemon we were connecting to. We ruled these out by validating all the files in /etc referenced the correct names, as well as trying to run the impala-shell from various hosts and to various Impala daemons. All hosts showed the same behavior.

Strike out, number three.

Wrongly cached DNS in Impala statestore?

This was a stretch. The statestore should only care about what’s now running on the cluster. Restarting the entire cluster, including all Impala daemons would have reset this.

Strike out, number four.

Wrongly referenced DNS in Hive Metastore?

Here is where it got interesting.

The easiest way to check for old host names in the Metastore would be to dump the database and look through it. We use PostgreSQL for the database backend, so it would be a simple matter of running pg_dump. Because we only run the “embedded” instance within Cloudera Manager in our sandboxes, we will need to run this from the Cloudera Manager node.

$ pg_dump -h localhost -p 7432 -U hive -a hive | grep -c hadoop.example.net 220

Bingo! We had 220 references to the old hostnames somewhere within the database in one or more tables. Digging around in the Hive Metastore dump, it appeared that we had references in three different tables: TABLE_PARAMS, DBS, and SDS.

Examples from each table:

TABLE_PARAMS

This table defines different parameters for the table.

hive=> select * from "TABLE_PARAMS" where "PARAM_VALUE" similar to '%hadoop.example.net%'; TBL_ID | PARAM_KEY | PARAM_VALUE --------+-----------------------+----------------------------------------------------------------------------------------------------------------- 26003 | avro.schema.url | hdfs://node1.hadoop.example.net:8020/user/hive/warehouse/testing.db/operating_system_type/.metadata/schemas/1.avsc

SDS

This table defines the locations of the tables (and possibly various partitions).

hive=> select "SD_ID", "LOCATION" from "SDS" where "LOCATION" similar TO '%hadoop.example.net%'; SD_ID | LOCATION --------+-------------------------------------------------------- 18480 | hdfs://node1.hadoop.example.net:8020/user/alice/testing

DBS

This table defines the location to the database directory.

hive=> select "DB_ID", "DB_LOCATION_URI" from "DBS" where "DB_LOCATION_URI" similar TO '%hadoop.example.net%'; DB_ID | DB_LOCATION_URI -------+----------------------------------------------------- 1 | hdfs://node1.hadoop.example.net:8020/user/hive/warehouse

Ok, now what?

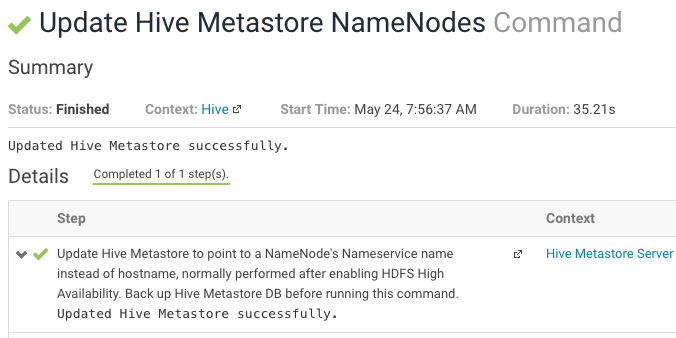

Now that we have an idea of what’s going on, how do we fix it? Well, reading through the documentation for host renames, we saw that there’s a step called “Update Hive Metastore Namenodes“. This is supposed to be run in the event that the nodes you’re renaming include the NameNodes for the cluster. We weren’t sure if this was run, so we attempted to do it again. This step requires that the cluster be completely disabled and turned off before Cloudera Manager will enable the option.

In theory, this command will run through the Hive Metastore and update all references of the old NameNode name to the current one (i.e. node1.hadoop.example.net to node1.cdhhive.example.net).

Running it showed success.

Great! Or so we thought. Attempts to re-issue the table creation continued to fail with the same error and looking through the database showed that the names in the HDFS paths were not getting updated.

A deeper look at the logs showed something curious: the wrong name was picked up for part of the work, so it only attempted to set the new hostname on entries that already used the new hostname for the NameNode.

Updating Hive Metastore to use location hdfs://node1.cdhhive.example.net:8020 List FS Root output: Initializing HiveMetaTool.. Listing FS Roots.. hdfs://node1.hadoop.example.net:8020/user/hive/warehouse/testing.db hdfs://node1.cdhhive.example.net:8020/user/hive/warehouse/foo.db hdfs://node1.hadoop.example.net:8020/user/hive/warehouse/bar_tutorial.db hdfs://node1.hadoop.example.net:8020/user/hive/warehouse hdfs://node1.hadoop.example.net:8020/user/hive/warehouse/shopping_demo.db hdfs://node1.hadoop.example.net:8020/user/hive/warehouse/analytics_demo.db hdfs://node1.cdhhive.example.net:8020/user/hive/warehouse/hive_dev.db hdfs://node1.hadoop.example.net:8020/user/hive/warehouse/sentry_demo.db hdfs://node1.cdhhive.example.net:8020/user/hive/warehouse/kudu_test.db Old FS Root: hdfs://node1.cdhhive.example.net:8020/user/hive/warehouse/kudu_test.db

And when we look further down the log where it updates the individual tables, we see that it only touches entries that had already used the correct host name.

Initializing HiveMetaTool.. Looking for LOCATION_URI field in DBS table to update.. Successfully updated the following locations.. . . old location: hdfs://node1.cdhhive.example.net:8020/user/hive/warehouse/kudu_test.db new location: hdfs://node1.cdhhive.example.net:8020/user/hive/warehouse/kudu_test.db Updated 3 records in DBS table

Super bizarre. So what to do?

The Hive Metastore Fix is In!

Our next option was to update the database entries by hand. But, because there were hundreds of entries, we didn’t want to update each row-by-row. A little googling and testing brought us the following three SQL statements that we ran, which allowed us to update all references correctly. Note: there may be other tables in your environment! This was an exhaustive fix for our environment, but YMMV.

update "TABLE_PARAMS" set "PARAM_VALUE" = replace ("PARAM_VALUE", 'hadoop.example.net', 'cdhhive.example.net') ;

update "DBS" set "DB_LOCATION_URI" = replace("DB_LOCATION_URI", 'hadoop.example.net','cdhhive.example.net') ;

update "SDS" set "LOCATION" = replace("LOCATION", 'hadoop.example.net','cdhhive.example.net') ;

Once this was done, we fired up Hive and Impala on the cluster and tested our table creation: success! The new table was created.

The Aftermath

Thinking through this, we’re still not sure how this could have occurred (other than a possible bug in Cloudera Manager). My best theory is that Hive and Impala managed to stay up and running through the host renames, allowing this weird condition to occur where old references to the hostnames worked because the hostname and IP address were cached. We had entries in the various location columns that referenced both the old and new addresses. At some point, the Hive Metastore may have crashed or been restarted, causing the problem to arise.

Funny enough, if we had enabled High Availability, I’m reasonably sure this problem would never have occurred because the various HDFS paths would have been stored using the HDFS High Availability name service name and not the real name of the NameNode.

You must be logged in to post a comment.